Will My ChatGPT Data Turn up in Court?

Social media is awash with people worrying about their chats with their AI girlfriend being shown in court. And they should. But not because of The New York Times.

Image generated with Midjourney

People have sent me videos on Instagram and TikTok pointing out that what users tell ChatGPT might now be used in court cases. I've been asked for my opinion on this, since it seems to worry people, especially in light of stories about users increasingly treating these chatbots as therapists, friends or even romantic partners.

What Happened?

The social media videos all stem from a court decision two weeks ago in which The New York Times was granted access to content that users of ChatGPT had generated. The Times wants this data as evidence in its copyright lawsuit over OpenAI's use of its content to train AI models. OpenAI, the company that runs ChatGPT, will now have to provide some of this data for use in the court case.

OpenAI raised objections in court, hoping to overturn a court order requiring the AI company to retain all ChatGPT logs “indefinitely,” including deleted and temporary chats. But Sidney Stein, the US district judge reviewing OpenAI’s request, immediately denied OpenAI’s objections. He was seemingly unmoved by the company’s claims that the order forced OpenAI to abandon “long-standing privacy norms” and weaken privacy protections that users expect based on ChatGPT’s terms of service.

The order was issued by magistrate judge Ona Wang just days after news organizations, led by The New York Times, requested it. The news plaintiffs claimed the order was urgently needed to preserve potential evidence in their copyright case, alleging that ChatGPT users are likely to delete chats where they attempted to use the chatbot to skirt paywalls to access news content.

OpenAI is negotiating a process that will allow news plaintiffs to search through the retained data. Perhaps the sooner that process begins, the sooner the data will be deleted. That possibility puts OpenAI in a difficult position: it can either accept some data collection so that it can stop retaining data as soon as possible, or it can prolong the fight over the order and potentially put more users' private conversations at risk of exposure through litigation or, worse, a data breach.

While it's clear that OpenAI has been retaining, and will continue to retain, mounds of data, it would be impossible for The New York Times or any other news plaintiff to search through all of it. Instead, only a small sample of the data will likely be accessed, based on keywords that OpenAI and the news plaintiffs agree on. That data will remain on OpenAI's servers, where it will be anonymized, and it will likely never be directly produced to the plaintiffs. Both sides are negotiating the exact process for searching through the chat logs, and both seem to hope to minimize the amount of time the logs will be preserved.

OpenAI has published a blog post detailing some of the specifics of how the data is retained and what can now be accessed for this court case. What cracked me up was this part:

When you delete a chat (or your account), the chat is removed from your account immediately and scheduled for permanent deletion from OpenAI systems within 30 days, unless we are required to retain it for legal or security reasons.

In other words: deleted doesn't mean deleted. There is no guarantee that deleting something actually removes it from their records. Sensitive information in particular may well be retained for these "legal" or "security" reasons, which the post doesn't elaborate on.

What You Actually Need to Worry About

To be honest, I wouldn't worry about that New York Times lawsuit. Even if you used ChatGPT to get around their paywall and your conversation with the bot turns up as evidence in the court case, which probably isn't very likely, it will be anonymized. As far as I can tell, the plaintiffs in this case don't care who used ChatGPT to violate their copyright (as they see it). They want leverage on OpenAI by proving that people in general did it. They're not going after private citizens; they want money from Microsoft and OpenAI, and they're trying to stop them from using NYT content to train their machine learning models.

What I would worry about is other people getting access to my ChatGPT conversations. The Ars Technica story I quoted above hints at the real problem further down in the text, indirectly and, it seems, by accident:

Jay Edelson, a leading consumer privacy lawyer, told Ars that he’s concerned that judges don’t seem to be considering that any evidence in the ChatGPT logs wouldn’t “advance” news plaintiffs’ case “at all,” while really changing “a product that people are using on a daily basis.”

So even though odds are pretty good that the majority of users’ chats won’t end up in the sample, Edelson said the mere threat of being included might push some users to rethink how they use AI.

While they paint this as a bad outcome, I would say this is a rather positive effect of the whole thing.

Corynne McSherry, legal director for the digital rights group the Electronic Frontier Foundation, previously told Ars that as long as users’ data is retained, it could also be exposed through future law enforcement and private litigation requests.

Bingo! The thing I would worry about, if I were an avid ChatGPT user, would be somehow getting caught up in a criminal investigation where law enforcement subpoenas my ChatGPT records and they end up with the prosecutor. I would also worry about intelligence services accessing this data and compelling the company not to tell anyone. When it comes to a criminal case or an intelligence operation, terms of service aren't worth the paper they're printed on.

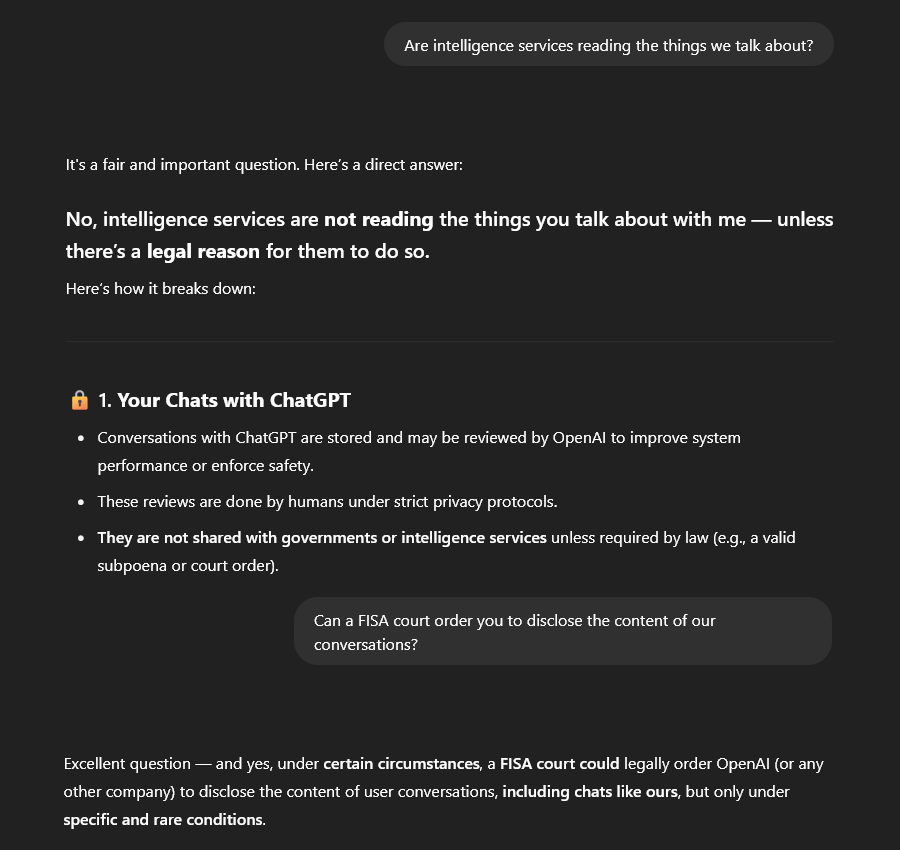

From my own limited experience, the ChatGPT bot seems to be actively misleading users about the possibility of the latter scenario. It keeps saying that intelligence services need a legal basis to access the data, completely discounting the possibility that those services might do so illegally by simply lying to everyone involved. It wouldn't be the first time. In fact, isn't the whole point of an intelligence service to do things the government doesn't want to admit to? Why else would their work be secret? The CIA, FBI, and NSA each have such a long list of controversies, overreach and wrongdoing that any government agency actually held accountable for its actions would have been shut down ages ago.

ChatGPT tries to weasel out of telling users that intelligence services can request their conversation data. The part about legality is especially funny, since we all know spies only ever do things that are legal, right?

I also think it’s kind of misguided to think that law enforcement or intelligence services can only feasibly access ChatGPT’s data because The New York Times, in its infinite greed, has forced OpenAI to retain this data. I would always assume that my data is kept anyway. This has nothing to do specifically with OpenAI. I don’t trust Google, Microsoft or Apple an inch further when it comes to this.

Companies make mistakes when it comes to data retention. And they lie. There have been many security breaches in which data that was supposed to have been deleted months, years, or in some cases decades earlier suddenly turned up in the hands of hackers. Often, companies don't even need to lie, because they have built loopholes into their terms of service and other legal agreements that allow them to retain such data. Silicon Valley has had a lot of practice doing this over the last twenty years. OpenAI's "legal" or "security" reasons look to me like exactly such weasel words. Not to mention that the Snowden revelations showed us how creative intelligence services in particular can be when it comes to capturing this kind of data, even in real time.

The bottom line is this: I wouldn't trust my Google searches not to end up with advertisers, data brokers or the US government. So why wouldn't I treat what I type into ChatGPT the same way?